What happens when you type the term “cloaking” into the Google search bar? Google returns a knowledge result that describes cloaking as a search engine technique which presents different content or a different URL to the user than to the search engine spider.

Cloaking is a search engine optimization (SEO) technique in which the content presented to the search engine spider differs from that presented to the user’s browser. It works by choosing which content to deliver based on the IP address or the User-Agent HTTP header of the client requesting the page. When the client is identified as a search engine spider, a server-side script delivers a different version of the web page, one that contains content not present on the visible page, or content that is present but not searchable.
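As a rough illustration of the decision step described above, the following Python sketch classifies a request as coming from a spider by looking for a crawler token in the User-Agent header or by checking the client IP against a list of networks. The token list, the network (an RFC 5737 documentation range) and the two version names are hypothetical placeholders rather than real crawler data; only the standard `ipaddress` module is used.

```python
import ipaddress

# Hypothetical, illustrative values only: real crawler user-agent strings
# and IP ranges change over time and are not taken from any search engine.
SPIDER_UA_TOKENS = ("googlebot", "bingbot", "duckduckbot")
SPIDER_NETWORKS = [ipaddress.ip_network("192.0.2.0/24")]  # RFC 5737 documentation range


def looks_like_spider(user_agent: str, client_ip: str) -> bool:
    """Return True if the request appears to come from a search engine spider."""
    ua = user_agent.lower()
    if any(token in ua for token in SPIDER_UA_TOKENS):
        return True
    try:
        addr = ipaddress.ip_address(client_ip)
    except ValueError:
        # A malformed address cannot match any known network.
        return False
    # An IPv6 address is simply reported as "not in" an IPv4 network.
    return any(addr in net for net in SPIDER_NETWORKS)


def select_version(user_agent: str, client_ip: str) -> str:
    """Name of the page version a cloaking script would serve (hypothetical names)."""
    return "spider_version" if looks_like_spider(user_agent, client_ip) else "visitor_version"
```

Because a search engine can easily change both its user agent and the address it crawls from, this kind of check is exactly what the detection methods listed later are designed to defeat.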

The purpose of cloaking is sometimes to deceive search engines so they display the page when it would not otherwise be displayed (black hat SEO). However, it can also be a functional (though antiquated) technique for informing search engines of content they would not otherwise be able to locate because it is embedded in non-textual containers such as video or certain Adobe Flash components. Since 2006, better methods of accessibility, including progressive enhancement, have been available, so cloaking is no longer necessary for regular SEO.

Cloaking is often used as a spamdexing technique to attempt to sway search engines into giving the site a higher ranking. By the same method, it can also be used to trick search engine users into visiting a site that is substantially different from the search engine description, including delivering pornographic content cloaked within non-pornographic search results.

Spamdexing (also known as search engine spam, search engine poisoning, black-hat search engine optimization (SEO), search spam or web spam) is the deliberate manipulation of search engine indexes. It involves a number of methods, such as link building and repeating unrelated phrases, to manipulate the relevance or prominence of indexed resources in a manner inconsistent with the purpose of the indexing system.

Cloaking is a form of the doorway page technique.

A similar technique is used on the DMOZ web directory, but it differs from search engine cloaking in several ways:

- It is intended to fool human editors, rather than computer search engine spiders.

- The decision to cloak or not is often based on the HTTP referrer, the user agent or the visitor’s IP address, but more advanced techniques can also analyse the client’s behaviour over a few page requests: the number of requests, their order, the latency between them, and whether the client checks for a robots.txt file are among the parameters on which search engine spiders differ markedly from human visitors. The referrer is the URL of the page on which a user clicked a link to reach the current page. Some cloakers give the fake page to anyone who comes from a web directory website, since directory editors usually examine sites by clicking on links that appear on a directory web page. Other cloakers give the fake page to everyone except visitors coming from a major search engine; this makes cloaking harder to detect while costing them few visitors, since most people find websites by using a search engine.
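The behavioural signals mentioned above can be approximated with a simple heuristic. This is a minimal sketch, assuming a session is a list of timestamped requests; the thresholds (`MAX_HUMAN_REQUESTS`, `MIN_HUMAN_GAP`) and the rule that two signals are enough are hypothetical choices for illustration, and the order of requests is not modelled.

```python
from dataclasses import dataclass
from statistics import mean

# Hypothetical thresholds chosen only for illustration.
MAX_HUMAN_REQUESTS = 50  # more requests than this in one session looks automated
MIN_HUMAN_GAP = 1.0      # average gap (seconds) below which requests look automated


@dataclass
class Request:
    timestamp: float  # seconds, non-decreasing within a session
    path: str


def spider_signals(session: list[Request]) -> list[str]:
    """Return the names of the spider-like signals present in a session."""
    signals = []
    # Raw quantity of requests.
    if len(session) > MAX_HUMAN_REQUESTS:
        signals.append("volume")
    # Latency between subsequent requests (needs at least two requests).
    if len(session) >= 2:
        gaps = [b.timestamp - a.timestamp for a, b in zip(session, session[1:])]
        if mean(gaps) < MIN_HUMAN_GAP:
            signals.append("latency")
    # Whether the client checked robots.txt.
    if any(r.path == "/robots.txt" for r in session):
        signals.append("robots_txt")
    return signals


def looks_automated(session: list[Request], min_signals: int = 2) -> bool:
    """Flag a session once it shows at least `min_signals` spider-like signals."""
    return len(spider_signals(session)) >= min_signals
```

A single signal is deliberately not enough here, since a curious human may open robots.txt and a fast reader may click quickly.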

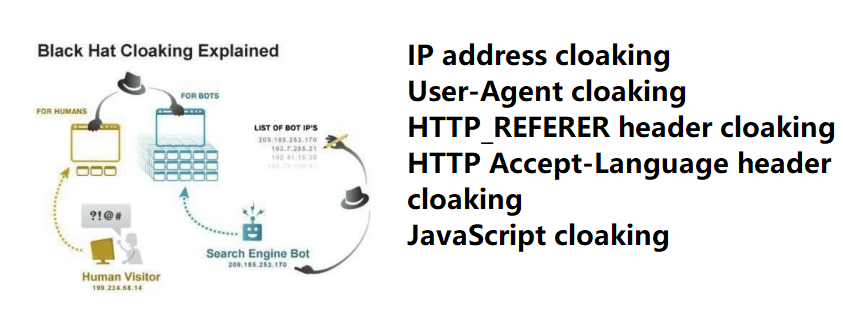

The main methods of cloaking are:

- IP address cloaking: The server determines the IP address of the incoming request. If the address belongs to a search engine, the cloaked version of the website is presented; otherwise, the regular version is presented.

- User-Agent cloaking: The version of the website presented depends on the User-Agent header. If the user agent identifies a search engine spider, the cloaked version is presented; otherwise, the regular version is presented.

- HTTP_REFERER header cloaking: The server inspects the HTTP_REFERER (Referer) header of the request and, based on it, presents either the cloaked or the uncloaked version of the website.

- HTTP Accept-Language header cloaking: The server inspects the Accept-Language header of the request. If the header matches that of a search engine, the cloaked version is presented; otherwise, the regular version is presented.

- JavaScript cloaking: Two different versions of the website are served: users with JavaScript enabled get one version, while users with JavaScript disabled get another. This works as cloaking because many spiders have historically not executed JavaScript.
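The Referer-based and Accept-Language-based methods can be sketched in the same way. The Python below is a hypothetical illustration: the host sets are placeholders, header names are assumed to be already normalised, and treating a missing Accept-Language header as crawler-like is an assumption based on some crawlers omitting it. JavaScript cloaking is not modelled, because that decision happens in the browser rather than on the server.

```python
from urllib.parse import urlsplit

# Hypothetical host lists used only for illustration.
SEARCH_ENGINE_HOSTS = {"www.google.com", "www.bing.com"}
DIRECTORY_HOSTS = {"directory.example"}


def header_signals(headers: dict[str, str]) -> set[str]:
    """Classify a request by its Referer and Accept-Language headers.

    Assumes header names have already been normalised to the
    capitalisation used below.
    """
    signals = set()
    # urlsplit("").hostname is None, so a missing Referer yields "".
    host = urlsplit(headers.get("Referer", "")).hostname or ""
    if host in DIRECTORY_HOSTS:
        signals.add("from_directory")
    elif host in SEARCH_ENGINE_HOSTS:
        signals.add("from_search_engine")
    if not headers.get("Accept-Language"):
        # Assumption: a missing Accept-Language header is treated as crawler-like.
        signals.add("no_accept_language")
    return signals
```

A cloaker following the "fake page for directory visitors" policy would act on `from_directory`; one following the "fake page for everyone except search visitors" policy would act on the absence of `from_search_engine`.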

Disadvantages of cloaking:

- Loss of PA (Page Authority) and DA (Domain Authority)

- Reduction of PA

- Loss of SERP (search engine results page) position

- Banning of the website from the search engine index

Search engines may use the following methods to detect cloaking:

- Web spiders may crawl from IP addresses that are not registered to their company.

- Spiders may visit the website using a non-spider user agent.

- Copies of a website cached by different sources owned by the search engine, e.g. the main spider and a page accelerator, are compared.

- Cached copies from different sources and different websites are compared.

- Algorithms are used to identify cloaking automatically.

- Human editors verify suspected cloaking.
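The comparison-based methods in the list above boil down to fetching a page twice, for example once as the main spider and once as an ordinary browser, and measuring how different the two copies are. This minimal sketch uses Python's standard `difflib.SequenceMatcher` on crudely extracted words; the regex tag stripping and the `SIMILARITY_THRESHOLD` value are simplifying assumptions, and a real system would need proper HTML parsing and tolerance for legitimately dynamic content.

```python
import difflib
import re

# Hypothetical threshold; a real system would tune it on labelled data.
SIMILARITY_THRESHOLD = 0.6


def visible_words(html: str) -> list[str]:
    """Crudely strip tags and return the lower-cased words of a page."""
    text = re.sub(r"<[^>]+>", " ", html)
    return re.findall(r"[a-z0-9']+", text.lower())


def similarity(copy_a: str, copy_b: str) -> float:
    """Ratio in [0, 1] of matching words between two copies of a page."""
    return difflib.SequenceMatcher(None, visible_words(copy_a), visible_words(copy_b)).ratio()


def suspect_cloaking(spider_copy: str, visitor_copy: str) -> bool:
    """Flag the page if the spider's copy and the visitor's copy differ too much."""
    return similarity(spider_copy, visitor_copy) < SIMILARITY_THRESHOLD
```

For example, a page whose spider copy is about "blue widgets" while the browser copy advertises something unrelated shares no words and scores 0.0.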

IP delivery can be considered a more benign variation of cloaking, where different content is served based upon the requester’s IP address. With cloaking, search engines and people never see the other’s pages, whereas, with other uses of IP delivery, both search engines and people can see the same pages. This technique is sometimes used by graphics-heavy sites that have little textual content for spiders to analyze.

One use of IP delivery is to determine the requester’s location and deliver content written specifically for that country. This isn’t necessarily cloaking. For instance, Google uses IP delivery in its AdWords and AdSense advertising programs to target users in different geographic locations.
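Location-based IP delivery differs from cloaking in that the page depends only on where the request comes from, not on whether the requester is a spider, so a spider and a person at the same location see the same page. A hypothetical sketch, with a tiny hand-written table (using RFC 5737 documentation ranges) standing in for a real geolocation database:

```python
import ipaddress
from typing import Optional

# Hypothetical mapping; a real site would query a maintained geolocation database.
COUNTRY_NETWORKS = {
    ipaddress.ip_network("192.0.2.0/24"): "DE",
    ipaddress.ip_network("198.51.100.0/24"): "FR",
}
LOCAL_CONTENT = {"DE": "German offers page", "FR": "French offers page"}
DEFAULT_CONTENT = "International offers page"


def country_for(client_ip: str) -> Optional[str]:
    """Return the country code for an IP address, or None if unknown."""
    try:
        addr = ipaddress.ip_address(client_ip)
    except ValueError:
        return None
    for network, country in COUNTRY_NETWORKS.items():
        if addr in network:
            return country
    return None


def content_for(client_ip: str) -> str:
    # The choice depends only on location, never on whether the client is a spider.
    return LOCAL_CONTENT.get(country_for(client_ip), DEFAULT_CONTENT)
```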

IP delivery is a crude and unreliable method of determining the language in which to provide content. Many countries and regions are multilingual, and the requester may be a foreign national. A better method of content negotiation is to examine the client’s Accept-Language HTTP header.
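Content negotiation with Accept-Language means parsing the header's language tags and their q-values, then picking the best match among the languages the site offers. The sketch below is a simplified version in the spirit of RFC 4647 lookup: it only understands the `q` parameter, falls back from a regional tag such as `de-at` to its primary subtag `de`, and treats `*` as "use the default". The function names are hypothetical.

```python
def parse_accept_language(header: str) -> list[tuple[str, float]]:
    """Parse an Accept-Language value into (tag, q) pairs, highest q first."""
    entries = []
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        tag, _, params = part.partition(";")
        q = 1.0  # a tag without a q parameter has weight 1
        params = params.strip()
        if params.startswith("q="):
            try:
                q = float(params[2:])
            except ValueError:
                q = 0.0  # treat an unparsable weight as "not acceptable"
        entries.append((tag.strip().lower(), q))
    # sorted() is stable, so tags with equal q keep their header order.
    return sorted(entries, key=lambda e: e[1], reverse=True)


def choose_language(header: str, available: list[str], default: str) -> str:
    """Pick the best available (lower-case) language tag, or the default."""
    for tag, q in parse_accept_language(header):
        if q <= 0:
            continue  # q=0 means the client does not want this language
        if tag == "*":
            return default
        if tag in available:
            return tag
        primary = tag.split("-")[0]
        if primary in available:
            return primary
    return default
```

For instance, a visitor in Austria whose browser sends `de-AT;q=0.9, en;q=0.5` receives the German page regardless of which country their IP address geolocates to.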

As of 2006, many sites had taken up IP delivery to personalise content for their regular customers. Many of the top 1000 sites, including Amazon (amazon.com), actively used IP delivery. None of these had been banned from search engines, as their intent was not deceptive.