Technical SEO is probably one of the least covered SEO topics, although you often see parts of it discussed in isolation. In case you don’t know, technical SEO is the side of SEO that focuses on optimising your site’s code and page speed, though it’s a bit broader than that.

No-Indexing Category & Tag Pages

You can do this with Yoast’s SEO plugin. In my old SEO guide, I recommended adding an article to these pages to give them some unique content. However, as WPRipper pointed out at the time, this seems to be hit or miss (possibly more often a miss). While it didn’t negatively affect my site, I went ahead and added noindex tags to my category and tag pages.
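If you want to confirm that the noindex setting is actually live, you can check a category or tag page’s HTML for a robots meta tag. Here’s a minimal Python sketch using only the standard library. `RobotsMetaParser` and `is_noindexed` are hypothetical names, and the sketch assumes the directive is set in a `<meta name="robots">` (or `googlebot`) tag. It doesn’t look at the `X-Robots-Tag` HTTP header, which can carry the same directive, and fetching the page’s HTML is left to you.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases tag and attribute names, but not attribute values.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def is_noindexed(html: str) -> bool:
    """Return True if the page's meta tags ask search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    # "none" is shorthand for "noindex, nofollow".
    return bool({"noindex", "none"} & parser.directives)
```

Feed it the HTML of one of your category pages. If it returns False after you’ve changed the setting, a caching plugin may still be serving the old version of the page.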

Increasing Site Speed

As far as page speed is concerned, Google has a tool called PageSpeed Insights that analyses a page and suggests what you can change to make it load faster.
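If you’d rather check pages in bulk than through the web interface, PageSpeed Insights also has an HTTP API. The sketch below assumes the v5 `runPagespeed` endpoint, and it assumes the JSON response reports the Lighthouse performance score at `lighthouseResult.categories.performance.score` as a value between 0 and 1. Check Google’s API documentation before relying on either. The helper names are hypothetical, and heavier use may need an API key.

```python
import json
from typing import Optional
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoint for version 5 of the PageSpeed Insights API.
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url: str, strategy: str = "mobile",
                  api_key: Optional[str] = None) -> str:
    """Build the request URL for analysing one page ("mobile" or "desktop")."""
    params = {"url": page_url, "strategy": strategy}
    if api_key:
        params["key"] = api_key
    return f"{PSI_ENDPOINT}?{urlencode(params)}"


def performance_score(report: dict) -> int:
    """Convert the assumed 0-1 Lighthouse score to the 0-100 scale the tool displays."""
    score = report["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)


def fetch_report(page_url: str, strategy: str = "mobile") -> dict:
    """Call the API over the network and return the parsed JSON report."""
    with urlopen(build_psi_url(page_url, strategy), timeout=120) as response:
        return json.load(response)
```

Usage would be something like `performance_score(fetch_report("https://example.com/"))`, where `example.com` is a placeholder for one of your own pages. Looping over a list of URLs gives you a quick before-and-after comparison once you’ve made changes.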

Using More Efficient Code

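For example, those of you with WordPress child themes may still be loading the parent theme’s stylesheet with @import instead of wp_enqueue_style(). The latter loads the theme faster, because @import makes the browser wait until it has downloaded the child stylesheet before it can even request the parent one.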

Compress Images

Rather than using PNG images when you don’t need to, convert them to JPEG. This can reduce their file size quite a bit, especially for photographs, which makes your pages load faster.
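JPEG doesn’t support transparency, so a PNG that uses it (a logo over a coloured background, say) is usually one you do need to keep. Here’s a minimal Python sketch that reads a PNG’s header chunks with the standard library and flags those files before you convert anything. The name `png_uses_transparency` is hypothetical. The check only looks at the colour type in the IHDR chunk and for a tRNS chunk, and it doesn’t do the conversion itself; an image editor or image library would handle that part.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
ALPHA_COLOUR_TYPES = {4, 6}  # greyscale + alpha, truecolour + alpha


def png_uses_transparency(data: bytes) -> bool:
    """Return True if the PNG has an alpha channel or a tRNS transparency chunk."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk is: 4-byte big-endian length, 4-byte type, body, 4-byte CRC.
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if chunk_type == b"IHDR" and body[9] in ALPHA_COLOUR_TYPES:
            return True  # byte 9 of the IHDR body is the colour type
        if chunk_type == b"tRNS":
            return True  # palette or single-colour transparency
        if chunk_type in (b"IDAT", b"IEND"):
            break  # tRNS must come before the image data, so stop looking
        pos += 12 + length  # skip length, type, body and CRC
    return False


def transparency_report(paths):
    """Map each PNG file path to whether it appears to use transparency."""
    result = {}
    for path in paths:
        with open(path, "rb") as handle:
            result[path] = png_uses_transparency(handle.read())
    return result
```

Files reported as `False` are the likeliest candidates for conversion. It’s still worth checking them by eye, because screenshots and flat graphics with sharp text can look worse as JPEGs.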

Using A Responsive Design

Clean Up 404 Errors

As far as outbound links are concerned, all you have to do is find the ones that return 404 errors and fix or remove them. You can do this with a plugin called Broken Link Checker (https://wordpress.org/plugins/broken-link-checker/).
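If you’re curious what a link checker does under the hood, or you want to audit a single page without a plugin, here’s a minimal Python sketch using the standard library. It is not how Broken Link Checker works internally, and `find_broken_links` and the other names are hypothetical. The sketch collects the `<a href>` links on a page, sends each one a HEAD request, and flags anything that returns a 4xx/5xx status (404 included) or can’t be reached at all.

```python
from html.parser import HTMLParser
from typing import Callable, List, Optional, Tuple
from urllib.error import HTTPError
from urllib.parse import urldefrag, urljoin, urlsplit
from urllib.request import Request, urlopen


class LinkCollector(HTMLParser):
    """Collect unique absolute http(s) URLs from <a href> attributes."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href") if tag == "a" else None
        if not href:
            return
        # Resolve relative links and drop #fragments, which never reach the server.
        url = urldefrag(urljoin(self.base_url, href)).url
        if urlsplit(url).scheme in ("http", "https") and url not in self.links:
            self.links.append(url)


def http_status(url: str, timeout: float = 10.0) -> Optional[int]:
    """Return the status code of a HEAD request, or None if the host can't be reached."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=timeout) as response:
            return response.status
    except HTTPError as err:
        return err.code  # 404, 410, 500 and so on
    except OSError:
        return None  # DNS failure, refused connection, timeout


def find_broken_links(
    html: str,
    base_url: str,
    status_of: Callable[[str], Optional[int]] = http_status,
) -> List[Tuple[str, Optional[int]]]:
    """Return (url, status) for every link that errors or can't be reached."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    broken = []
    for url in collector.links:
        status = status_of(url)
        if status is None or status >= 400:
            broken.append((url, status))
    return broken
```

The `status_of` parameter lets you swap in a different checker, which also makes the function easy to test without touching the network. Some servers reject HEAD requests outright, so treat anything flagged as something to double-check in a browser rather than a definite broken link.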

For 404 internal links on your site, I’m on the fence. There are definitely times when you absolutely have to 301 redirect your old URLs to the new ones, such as when you switch your site from HTTP to HTTPS (or from www to non-www, or vice versa). If you don’t, you’ll run into what are called canonicalisation issues, where Google indexes both versions of your site. This is similar to keyword cannibalisation, where several of your own pages compete for the same search query.

However, if you remove an article from your site (along with the internal links pointing to it), it isn’t necessary to 301 redirect its URL anywhere.
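The rule behind the HTTP-to-HTTPS and www-to-non-www redirects is simple: any request that doesn’t use your preferred scheme and host gets a permanent (301) redirect to the same path on the preferred version. In practice you’d set this up in your server configuration, with your host, or through a plugin. The Python function below is only a sketch of the decision logic. `CANONICAL_HOST` and `redirect_for` are hypothetical names, `example.com` stands in for your domain, and HTTPS without www is assumed to be the preferred version.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit, urlunsplit

# Hypothetical preferred version of the site: HTTPS, non-www.
CANONICAL_SCHEME = "https"
CANONICAL_HOST = "example.com"


def redirect_for(url: str) -> Optional[Tuple[int, str]]:
    """Return (301, target) if the URL isn't the canonical version, else None."""
    parts = urlsplit(url)
    if parts.scheme == CANONICAL_SCHEME and parts.netloc == CANONICAL_HOST:
        return None  # already canonical, so serve the page normally
    # Keep the path and query so each old URL maps to its exact new equivalent,
    # rather than sending everything to the home page.
    path = parts.path or "/"
    target = urlunsplit((CANONICAL_SCHEME, CANONICAL_HOST, path, parts.query, ""))
    return (301, target)
```

For example, a request for `http://www.example.com/blog/?p=1` would be sent to `https://example.com/blog/?p=1`. Preserving the path is what lets Google transfer each old URL’s signals to its new equivalent.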

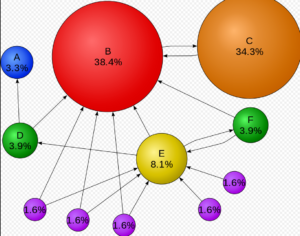

Then we come to the robots.txt file, which lives in your website’s root directory and tells search engines which parts of your site they may crawl. If you care at all about SEO, you should know about this file. It lets you tell search engines not to crawl parts of your site that you don’t think are relevant to users.

Let’s take a look at the WordPress robots.txt file. The User-agent: * line at the beginning means the rules that follow apply to all crawlers. Disallow tells crawlers to stay out of a path, Allow explicitly lets crawlers access a path, and the Sitemap line points crawlers to your sitemap.
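To see how a crawler reads these rules, here’s a small Python sketch using the standard library’s `urllib.robotparser`. The file below is modelled on the default rules WordPress generates (your own may differ). `example.com` is a placeholder domain, `/wp-sitemap.xml` is an assumed sitemap path, and `load_rules` is a hypothetical helper name. One caveat: Python’s parser applies the first rule that matches, whereas Google applies the most specific one, so the two can disagree about paths like `/wp-admin/admin-ajax.php`.

```python
from urllib.robotparser import RobotFileParser

# Modelled on WordPress's default robots.txt; example.com is a placeholder.
WORDPRESS_ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php

Sitemap: https://example.com/wp-sitemap.xml
"""


def load_rules(text: str) -> RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rules = load_rules(WORDPRESS_ROBOTS_TXT)

for path in ("/wp-admin/", "/blog/hello-world/"):
    # "Googlebot" has no group of its own here, so the User-agent: * rules apply.
    allowed = rules.can_fetch("Googlebot", "https://example.com" + path)
    print(path, "allowed" if allowed else "blocked")

print("Sitemaps:", rules.site_maps())
```

Run as-is, it reports `/wp-admin/` as blocked and `/blog/hello-world/` as allowed, and lists the sitemap URL. For a live site, `RobotFileParser` can also download the file itself via `set_url()` and `read()`.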